Google Data Center in Belgium (image source)

Google Cloud Platform (GCP) is a relatively new player in the cloud platform market, having entered it after Amazon and Microsoft (though the exact dates are debatable). Each of these three players had a distinct market entry scenario and starting position.

In the case of Microsoft Azure, announced in October 2008, the task was mostly to move an existing enterprise client base from on-premises versions of Microsoft products to their cloud counterparts, with the option of a hybrid approach that gradually bridges or mixes on-premises installations with cloud offerings. Microsoft already had established trust in the enterprise sector, so entering the cloud market was, in a way, competing with its own on-premises products. In Microsoft's case, the massive enterprise client base made this both easier and harder at the same time.

The Amazon Web Services (AWS) platform, launched in 2002 (S3 and EC2 followed in 2006), was largely based on Amazon's large and efficient infrastructure, which the company built on its own, without Microsoft or other commercial software, to run its e-commerce business. That infrastructure evolved into what some people call a “fancy/specialized” blend of Linux cloud, but it nonetheless turned out to be an extremely successful and cost-efficient cloud platform. We can partly attribute this to Amazon being free from competing with, or having to align its cloud offering with, existing on-premises products of any kind, and even more to its early entry into the market (here Amazon seems to have repeated the perfect market entry timing it had with its e-commerce business and its famous “everything store”). So AWS was, in a way, a pure cloud business from the very beginning: it used Amazon's massive pre-existing internal infrastructure as a starting point and benefited from a popular, well-recognized name in the consumer market.

Google, somewhat like Amazon, was hugely popular among consumers; moreover, it won that popularity with web applications offering basic yet slick and efficient services, adopted by myriads of individual consumers worldwide. For Google, entering the cloud platform market meant gaining the trust of enterprise clients, i.e. entering the enterprise market and going beyond individual consumer use cases. The journey started with the introduction of Google App Engine in 2008, which, as other services were added, subsequently became part of GCP.

All of the cloud platforms mentioned above share the traits and advantages common to cloud technologies in general: economies of scale, with cloud infrastructures grown from or based on the internal infrastructure and software that vendors use to run their own services, which yields a cost efficiency that is difficult to beat with on-premises alternatives built from scratch; rapid provisioning and streamlined management; on-demand scalability; and an overall architecture that meets various compliance requirements (which tend to vary by industry and region).

The set of features and services tends to converge across vendors, as each strives to offer everything a client may need and does not let competitors be unique, at least in the “we have this service and they don't” sense.

Of course, there are differentiators in the available services and their implementations, most prominently in the software and other intellectual property (IP) that shape the part of cloud services visible to end customers (hardware is also differentiated, but it is mostly abstracted away from consumers in line with cloud design principles). One salient point of GCP worth mentioning is its high access speeds, which can be attributed to the company's massive investment in so-called dark fibre back in 2005 (though this can of course vary with your geographical location).

Let's have a closer look at GCP. Historically, its beginnings can be traced back to Google App Engine, which was announced in preview in April 2008. Later, in 2011, App Engine was used by the Snapchat developers, who were somewhat intimidated by the “complexity” of AWS (at least at that time) and decided to use Google App Engine instead. Nowadays App Engine is just one of many services in the GCP offering.

Since 2008 Google has steadily launched new services and added features, along with occasional acquisitions (Stackdriver and Firebase in 2014, Bebop in 2015, Kaggle in 2017, Xively in 2018).

In 2016 Google launched its annual cloud conference, Google Cloud Next, to support building a community of cloud developers around its platform.

“Why Google Cloud Platform?” – fragment of cloud.google.com main page

Google also invested in artificial intelligence (AI) and machine learning (ML), computational techniques that let computers work on behalf of humans (to some extent at least 😊). It also created custom ML hardware, Google Tensor Processing Units (TPUs), used at Google to run workloads such as Google Search, Street View, Photos, and Translate. TPUs were also used to beat a human champion at Go (the AlphaGo versus Lee Sedol match in March 2016). This TPU hardware can now be rented through Google Cloud (it became available in beta in February 2018).

The most recent addition to GCP (from 2019) is Google Cloud Run, a compute execution environment based on Knative.

Let’s look at what GCP looks like at the end of 2019, after almost 12 years of evolution.

At the moment, the GCP main page lists more than 90 products grouped into the following categories: Compute, Storage and Databases, Networking, Big Data, Data Transfer, API Platform & Ecosystem, Internet of Things, Cloud AI, Management Tools, Developer Tools, Identity & Security and, somewhat standing apart, a Professional Services category.

After looking at this list, most people would not think that GCP is in any way easier than AWS, though it probably looked much smaller back in 2011. As all cloud platforms strive to become all-encompassing and feature-complete (a consequence of extremely competitive market conditions), they inevitably end up with enormous web consoles that desperately try to bring it all together, which makes navigating them a branch of knowledge and an art in its own right. Simplicity and cost can only be evaluated for a specific use case or service, with the small catch that once you enter one vendor's cloud, you are heavily incentivized (if not at times forced) to stay and expand within it. That is another point to evaluate when selecting a cloud service for your project: interoperability and the possibility of an exit migration. All in all, we can say that GCP is quite comprehensive feature-wise and on par with the other major players.

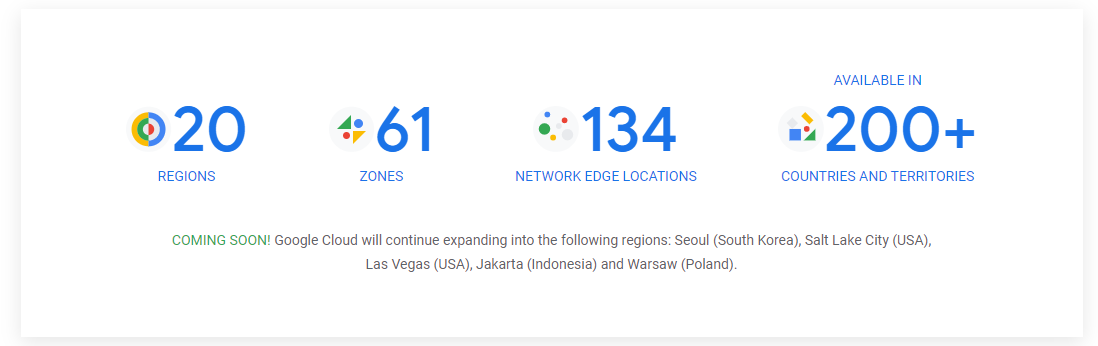

In terms of infrastructure scale and availability, it is worth looking at the GCP locations page: as you can see there, or in the screenshot below, GCP currently has 20 regions, 61 zones, and 134 network edge locations, and is available in 200+ countries and territories.

GCP Locations (source)
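Regions and zones follow a simple naming convention: a zone name is its region name plus a hyphen and a letter suffix. For example, zone `europe-west1-b` belongs to region `europe-west1`, which is the Belgian region from the photo at the top. Here is a minimal Python sketch that derives the region from a zone name. It assumes only that convention, and the helper names are hypothetical.

```python
import re

# Assumption: zone names look like "<region>-<letter>", e.g. "europe-west1-b",
# and region names look like "<area>-<direction><number>", e.g. "europe-west1".
_ZONE_RE = re.compile(r"^(?P<region>[a-z]+-[a-z]+\d+)-(?P<suffix>[a-z])$")


def region_of(zone: str) -> str:
    """Return the region part of a zone name, e.g. 'europe-west1-b' -> 'europe-west1'."""
    match = _ZONE_RE.match(zone)
    if match is None:
        raise ValueError(f"not a zone name: {zone!r}")
    return match.group("region")


def group_by_region(zones):
    """Group zone names by region, keeping the input order within each region."""
    groups = {}
    for zone in zones:
        groups.setdefault(region_of(zone), []).append(zone)
    return groups


print(group_by_region(["europe-west1-b", "europe-west1-c", "us-central1-a"]))
# {'europe-west1': ['europe-west1-b', 'europe-west1-c'], 'us-central1': ['us-central1-a']}
```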

Now I would like to touch briefly on specific GCP services, focusing on three major blocks of GCP technologies, just to give you a better idea of what is available.

Compute. The main offerings here are virtual machines, scalable containers, and orchestration. In this category we can find services such as:

- App Engine – Google App Engine (GAE) lets you build and deploy applications on a fully managed platform. Essentially it is a PaaS for hosting web applications, comparable to AWS Elastic Beanstalk.

- Compute Engine (VMs) – lets you create VM instances (new, from a template, or from the Marketplace). When you create a Compute Engine VM, you immediately see an estimate of its monthly cost, and in addition to the predefined machine types you can allocate CPU and memory directly with the sliders under the Customize options. That seems a more human approach: you can simply describe your VMs by their specs instead of wrapping your head around a potpourri of vendor-named VM types with “human-friendly” codes (the approach we see in the AWS and Microsoft Azure clouds).

- Kubernetes Engine (Containers) – Google Kubernetes Engine (GKE) lets you create managed Kubernetes clusters that run Docker containers (containerized apps), with Kubernetes orchestrating groups of those containers.

- Cloud Functions (Functions) – lets you create a function for a specific runtime, with a specific trigger and an allocated amount of memory (the closest GCP counterpart to AWS Lambda).

- Cloud Run (Containers) – this serverless compute option, introduced in 2019, can be considered a middle ground between Kubernetes Engine and Cloud Functions (it is often compared with AWS Lambda, though it runs whole containers rather than individual functions). It essentially lets you bring your own container and run it either in a fully managed environment or in your own GKE cluster.
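The CPU and memory sliders map onto Compute Engine custom machine types, which are named `custom-<vCPUs>-<memory in MB>` (for example `custom-4-15360` for 4 vCPUs and 15 GB). The sketch below shows the arithmetic behind a monthly estimate of this kind. The per-vCPU and per-GB hourly rates are hypothetical placeholders rather than Google's prices. The 730-hour month and the "memory in multiples of 256 MB" check are assumptions, so verify them against the current custom machine type documentation.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # assumption: an average month, roughly 24 * 365 / 12


@dataclass(frozen=True)
class CustomVm:
    vcpus: int
    memory_mb: int

    def machine_type(self) -> str:
        """Return the custom machine type name, custom-<vCPUs>-<memory MB>."""
        if self.vcpus < 1:
            raise ValueError("need at least one vCPU")
        # Assumed rule: custom memory is allocated in multiples of 256 MB.
        if self.memory_mb % 256 != 0:
            raise ValueError("memory must be a multiple of 256 MB")
        return f"custom-{self.vcpus}-{self.memory_mb}"

    def monthly_estimate(self, vcpu_hourly: float, gb_hourly: float) -> float:
        """Estimate a monthly cost from hypothetical per-vCPU and per-GB hourly rates."""
        memory_gb = self.memory_mb / 1024
        hourly = self.vcpus * vcpu_hourly + memory_gb * gb_hourly
        return round(hourly * HOURS_PER_MONTH, 2)


vm = CustomVm(vcpus=4, memory_mb=15360)
print(vm.machine_type())  # custom-4-15360
# Hypothetical rates: 0.03 per vCPU-hour and 0.004 per GB-hour.
print(vm.monthly_estimate(vcpu_hourly=0.03, gb_hourly=0.004))
```

Because the price is a sum of per-resource rates, talking about a VM "by its specs" is enough to reason about its cost.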
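On GKE, workloads are described declaratively as Kubernetes objects, and Kubernetes keeps the cluster matching that description. Here is a minimal sketch that builds an `apps/v1` Deployment manifest (three replicas of one container) as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts. The app name, port, and image path `gcr.io/my-project/hello:1.0` are hypothetical placeholders.

```python
import json


def deployment(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Return a minimal apps/v1 Deployment running `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image; save the output to a file and run: kubectl apply -f <file>
    print(json.dumps(deployment("hello", "gcr.io/my-project/hello:1.0"), indent=2))
```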
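The main requirement Cloud Run places on your container is that it serves HTTP on the port given in the `PORT` environment variable (8080 by default). Here is a minimal standard-library sketch of such a service. The response text and the `server.py` file name are arbitrary, and packaging the file into a container image (the Dockerfile) is not shown.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text greeting."""

    def do_GET(self):
        body = b"Hello from Cloud Run\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    # Listen on all interfaces so traffic routed into the container reaches us.
    return HTTPServer(("", port), HelloHandler)


def main():
    # Container entry point, e.g. CMD ["python", "-c", "import server; server.main()"]
    # if this file is saved as server.py (hypothetical name).
    # Cloud Run sets PORT; fall back to 8080 for local runs.
    make_server(int(os.environ.get("PORT", "8080"))).serve_forever()
```

Because the contract is just "HTTP on `PORT`", the same container can also run on your own GKE cluster, which is the middle ground described above.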

Storage and Databases. Here we have an object store, managed SQL, an SQL data warehouse, and various NoSQL options.

Google Cloud Storage lets you create buckets of different storage classes (Multi-Regional, Regional, Nearline, and Coldline) and choose their location to meet regulatory or other requirements. Buckets store your files (objects).
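Choosing among these classes is mostly a question of access frequency. Google's guidance positions Nearline for data read less than about once a month (with a 30-day minimum storage duration) and Coldline for data read less than about once a year (90-day minimum). Here is a rough sketch of that rule of thumb. The helper is hypothetical, not part of any Google library, and the returned strings are the storage class names used by the Cloud Storage API.

```python
def suggest_storage_class(accesses_per_year: float, serve_worldwide: bool = False) -> str:
    """Rule-of-thumb choice among the Cloud Storage classes named above.

    Hypothetical helper: thresholds follow the guidance that Nearline suits
    data read less than about once a month and Coldline data read less than
    about once a year.
    """
    if accesses_per_year < 1:
        return "COLDLINE"  # 90-day minimum storage duration applies
    if accesses_per_year < 12:
        return "NEARLINE"  # 30-day minimum storage duration applies
    # Frequently accessed ("hot") data: choose by the geographic scope you serve.
    return "MULTI_REGIONAL" if serve_worldwide else "REGIONAL"


print(suggest_storage_class(4))  # NEARLINE
```

In practice, retrieval and early-deletion charges for the colder classes also belong in the comparison, so treat this only as a starting point.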

Google Cloud SQL lets you store structured data in a fully managed relational database service. You can create MySQL, PostgreSQL, and SQL Server databases.

BigQuery, the GCP data warehouse, lets you run standard SQL queries on serverless infrastructure over data both in BigQuery storage and in files stored in Cloud Storage buckets. You can analyze all your batch and streaming data by creating a logical data warehouse over managed columnar storage, as well as over data from object storage and spreadsheets, and then build very fast dashboards and reports with the in-memory BI Engine.
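To show what BigQuery's standard SQL looks like in practice, here is a sketch that builds a query against the public `bigquery-public-data.usa_names.usa_1910_2013` dataset, where states are two-letter codes such as "TX". Standard SQL puts backticks around `project.dataset.table` names, and the state is passed as the named query parameter `@state` rather than pasted into the SQL text. The query itself is built without any dependencies. Running it requires the `google-cloud-bigquery` client library and GCP credentials. Both helper names are hypothetical.

```python
TABLE = "bigquery-public-data.usa_names.usa_1910_2013"


def top_names_sql(limit: int = 10) -> str:
    """Standard SQL: the most common names in one state (state bound as @state)."""
    if limit < 1:
        raise ValueError("limit must be positive")
    return (
        "SELECT name, SUM(number) AS total\n"
        f"FROM `{TABLE}`\n"
        "WHERE state = @state\n"
        "GROUP BY name\n"
        "ORDER BY total DESC\n"
        f"LIMIT {int(limit)}"
    )


def run_top_names(state: str, limit: int = 10):
    """Run the query; needs google-cloud-bigquery and GCP credentials."""
    from google.cloud import bigquery  # imported lazily so this module loads without it

    client = bigquery.Client()
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("state", "STRING", state)]
    )
    rows = client.query(top_names_sql(limit), job_config=job_config).result()
    return [(row["name"], row["total"]) for row in rows]


if __name__ == "__main__":
    print(top_names_sql(5))
```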

Machine Learning. GCP is considered to have quite a strong AI services portfolio, including ML and deep neural network (DNN) offerings. The number of services under the ML umbrella is so large that it calls for a dedicated blog post, or even several (which I will try to write later). This huge block of services includes things such as:

- Dataprep – a service for preparing data: uploading files and building flows to “wrangle” data for further transformation

- Cloud Data Fusion – lets you build more complex integration pipelines, for scenarios where Dataprep functionality is not sufficient, by creating Data Fusion instances of the Basic or Enterprise edition

- Google Data Studio – can be opened from the BigQuery console via the Explore in Data Studio link, and lets you take the results of your query and visualize them in different ways. Alternatively, you can access it through the datastudio.google.com page, which provides an interface for creating nicely designed reports with rich controls that retrieve data from different sources (via Data Studio connectors).

- AI Platform and AI Hub – include components at several levels: SaaS APIs (vision, language, chatbot, classification, regression), where you simply supply data and the APIs handle data preparation, model selection, and training and produce predictions from the supplied data; then PaaS with managed containers (Docker ML images); and IaaS VMs (custom ML images).

- Jupyter Notebooks – lets you create notebook instances (behind each instance is a Compute Engine VM with JupyterLab installed). Jupyter Notebook is a web application that lets you create and share documents containing live code (Python 2/3), equations, visualizations, and text.

- Colab notebooks – Colab lets you use the same notebook format as Jupyter, but with the ability to run your code on a hardware accelerator (GPU or TPU) and to use Google Docs collaboration features.

- Cloud Vision API – lets you use Google’s pre-trained models to label images and detect faces, logos, and so on.

- Cloud AutoML Tables – can be used to create supervised ML models from your tabular data, with support for various data and problem types. Supported problem types include binary classification, multi-class classification, and regression.

- Data Science VM – provides VMs with preinstalled ML modules; using VMs gives you more granular control over serving or updating an ML model.
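To give a feel for the Cloud Vision API, this sketch builds the JSON body for its REST `images:annotate` method, which you POST to `https://vision.googleapis.com/v1/images:annotate`. The request asks for labels, faces, and logos in one go. It only constructs the payload. Authentication (API key or OAuth token) and sending the request are left out, and the helper name is hypothetical.

```python
import base64
import json


def annotate_request(image_bytes: bytes, max_results: int = 10) -> str:
    """Build an images:annotate request body for label, face and logo detection."""
    features = [
        {"type": feature_type, "maxResults": max_results}
        for feature_type in ("LABEL_DETECTION", "FACE_DETECTION", "LOGO_DETECTION")
    ]
    body = {
        "requests": [
            {
                # Inline image content is sent base64-encoded inside the JSON body.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": features,
            }
        ]
    }
    return json.dumps(body)


# Placeholder bytes stand in for a real image file here.
print(annotate_request(b"abc", max_results=5))
```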
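Whether you open it in JupyterLab on a notebook instance or in Colab, a notebook is a JSON document in the nbformat 4 layout: a list of cells, each holding either Markdown text (which can include LaTeX equations) or code together with its outputs. Here is a minimal standard-library sketch that builds such a document. The cell contents are placeholders, and saving the printed JSON with an `.ipynb` extension should let Jupyter open it.

```python
import json


def make_notebook(markdown: str, code: str) -> dict:
    """Return a minimal nbformat 4 notebook with one Markdown cell and one code cell."""
    return {
        "nbformat": 4,
        "nbformat_minor": 4,
        "metadata": {},
        "cells": [
            {"cell_type": "markdown", "metadata": {}, "source": markdown},
            {
                "cell_type": "code",
                "metadata": {},
                "execution_count": None,  # the cell has not been run yet
                "outputs": [],
                "source": code,
            },
        ],
    }


if __name__ == "__main__":
    nb = make_notebook("# Hello GCP\nEuler: $e^{i\\pi} + 1 = 0$", "print(1 + 1)")
    print(json.dumps(nb, indent=1))
```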
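The three AutoML Tables problem types differ mainly in the target column. A categorical target with two values means binary classification, more values mean multi-class classification, and a numeric target means regression. The sketch below illustrates that distinction with a simplified heuristic of our own. It is not AutoML Tables' actual logic, and the function name and return strings are hypothetical.

```python
from numbers import Number


def infer_problem_type(target_values, categorical=None) -> str:
    """Guess a problem type for a target column (hypothetical heuristic).

    If `categorical` is None, a column whose values are all numbers (bools
    excluded) is treated as a regression target; otherwise it is categorical.
    """
    values = list(target_values)
    if not values:
        raise ValueError("target column is empty")
    if categorical is None:
        categorical = not all(
            isinstance(v, Number) and not isinstance(v, bool) for v in values
        )
    if not categorical:
        return "regression"
    distinct = len(set(values))
    if distinct < 2:
        raise ValueError("a classification target needs at least two classes")
    return "binary_classification" if distinct == 2 else "multi_class_classification"


print(infer_problem_type(["yes", "no", "yes"]))  # binary_classification
```

The explicit `categorical` flag covers numeric codes such as 0/1 labels, which should be treated as classes rather than quantities.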

I think that is enough for an introductory GCP overview (otherwise it could not be called introductory, right? 😊). I do plan to write more blog posts about GCP, focusing on practical aspects of working with the platform and on its specific parts and service types. I hope this post was informative; thank you for reading, and stay tuned for new posts.

Filed under: Services

By Mikhail Rodionov